White Blood Unicellular Classification

2019-03-21

- 1 Introduction

- 1.1 Motivation

- 1.2 load package

- 1.3 Dataset description

- 1.4 Description of the First Dataset

- 1.5 Exploration and Transformation of the first dataset

- 1.5.1 Transformation of

labels.csvfile - 1.5.2 Explore and Transform XML file

- 1.5.3 Function to extract x, y limits of WBC from xml files

- 1.5.4 Group all x, y limits of WBC into dataframe

- 1.5.5 Explore only images with multiple WBC

- 1.5.6 Merge all needed informations of images with multiple WBC into dataframe

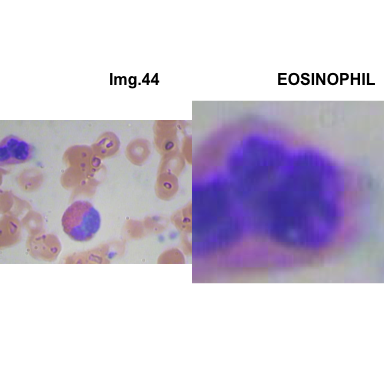

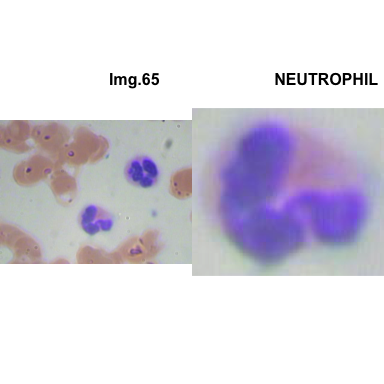

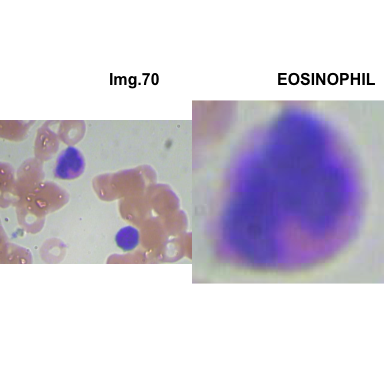

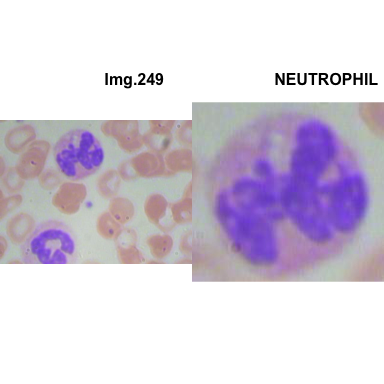

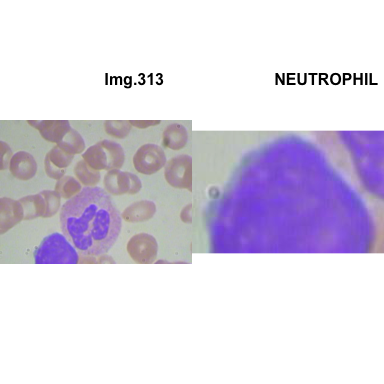

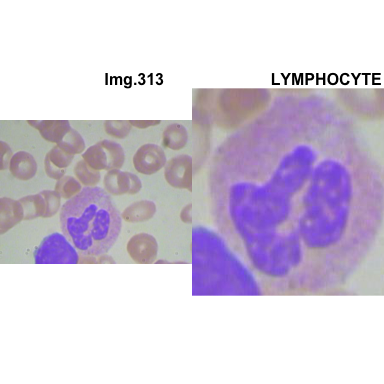

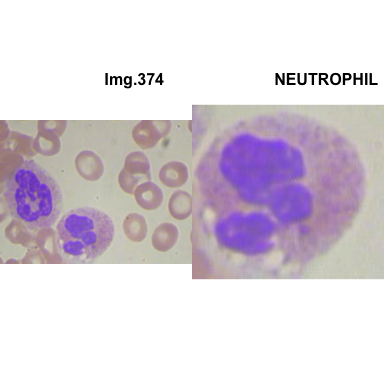

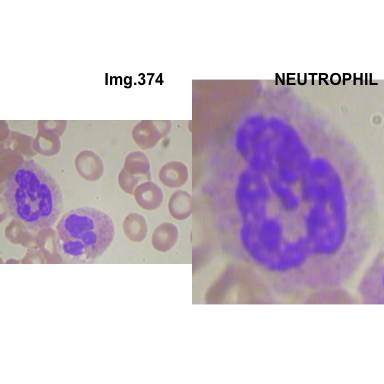

- 1.5.7 Function to plot cropped WBC from images

- 1.5.8 Merge all needed informations of all images in dataset-master into dataframe

- 1.5.9 Function used to crop WBC from images

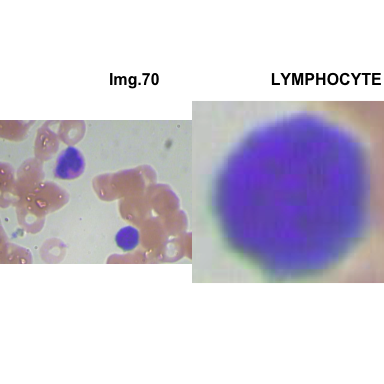

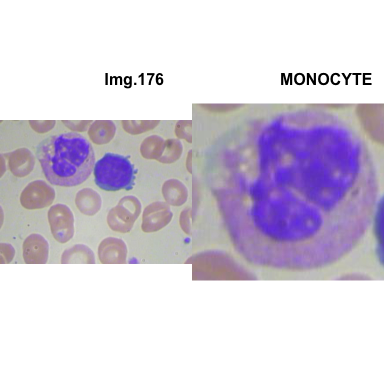

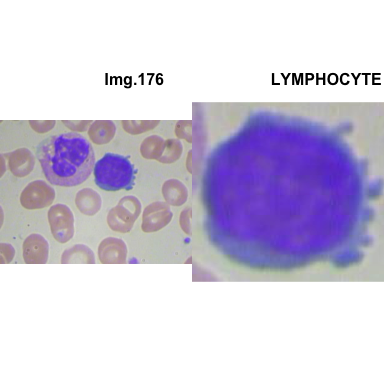

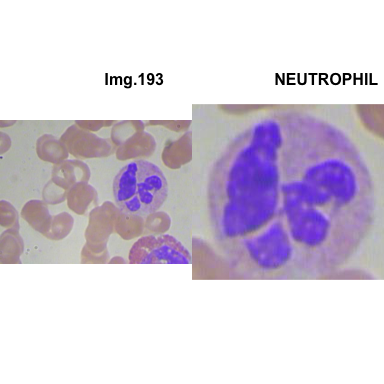

- 1.5.10 Glimpse of some crops

- 1.5.11 Dataset Augmentation

- 1.5.12 Plot image from a list of list

- 1.5.13 Deep learning using

mxnetPackage - 1.5.14 Set training parameters

- 1.5.15 Built a Model

- 1.5.16 Summary of the model

- 1.5.17 Test Model

- 1.5.18 Compute the means of identical labels, located in the diagonal

- 1.5.19 Visualize predicted class and compare them to original class from testing dataset

- 1.5.1 Transformation of

- 1.6 Description of the second dataset

1 Introduction

Medical Image Analysis is a widely used method to screen and diagnose diseases. Depending of the part or tissue of the body would check, we can subset imaging Technics into 2 big methods. First the methods that use rays, like x-ray, Nuclear Magnetic Resonance (NMR) or Scintigraphy. In general, the images generated by these methods are as a photographic film (black and white). The advantage of these methods is that we can observe body through tissues and have access to internal parts without chirurgy. The inconvenient is that these methods uses ionizing radiation (dangerous for health) and mainly expensive. There are at less two kaggle competition with this type of images: NIH Chest X-rays and Chest X-Ray Images (Pneumonia) The second kind of medical imaging is applied to all type of tissues or physiological fluid (plasma, blood). The samples are prepared on glass slide and stained with Hematoxylin and eosin stain (H&E). This technique is very old and widely used for several medical screening and diagnostics. H&E staining is not expensive but needs Human intervention and the processing takes a while. The interpretation of the images also needs multiple experts (pathologist) to visualize and diagnose slides mainly through microscopes. the analysis remains subjective and could differ between pathologists.

On the other hand, the H&E stains are routinely prepared by technicians who become overwhelmed by the increasing number of samples. Likewise, pathologists are over-worked by the microscopic observation and interpretation, causing overflow that may result in diagnostic error.

1.1 Motivation

A computational grading of slides using learning machine may help to make a pre-screening a hundred or thousand or slides before Human verification.

1.2 load package

1.3 Dataset description

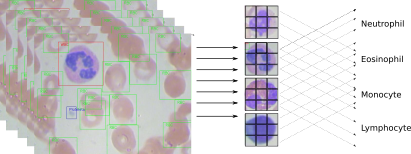

The images of this competition shows blood cell spread on glass slide and stained by H&E method. We can visualize red blood cell and immune cells. It is easy to make a difference between these two kind of cells. The coloration is not the same, red cells are red but immune cell are purple.

1.3.1 Glimpse of digital slide

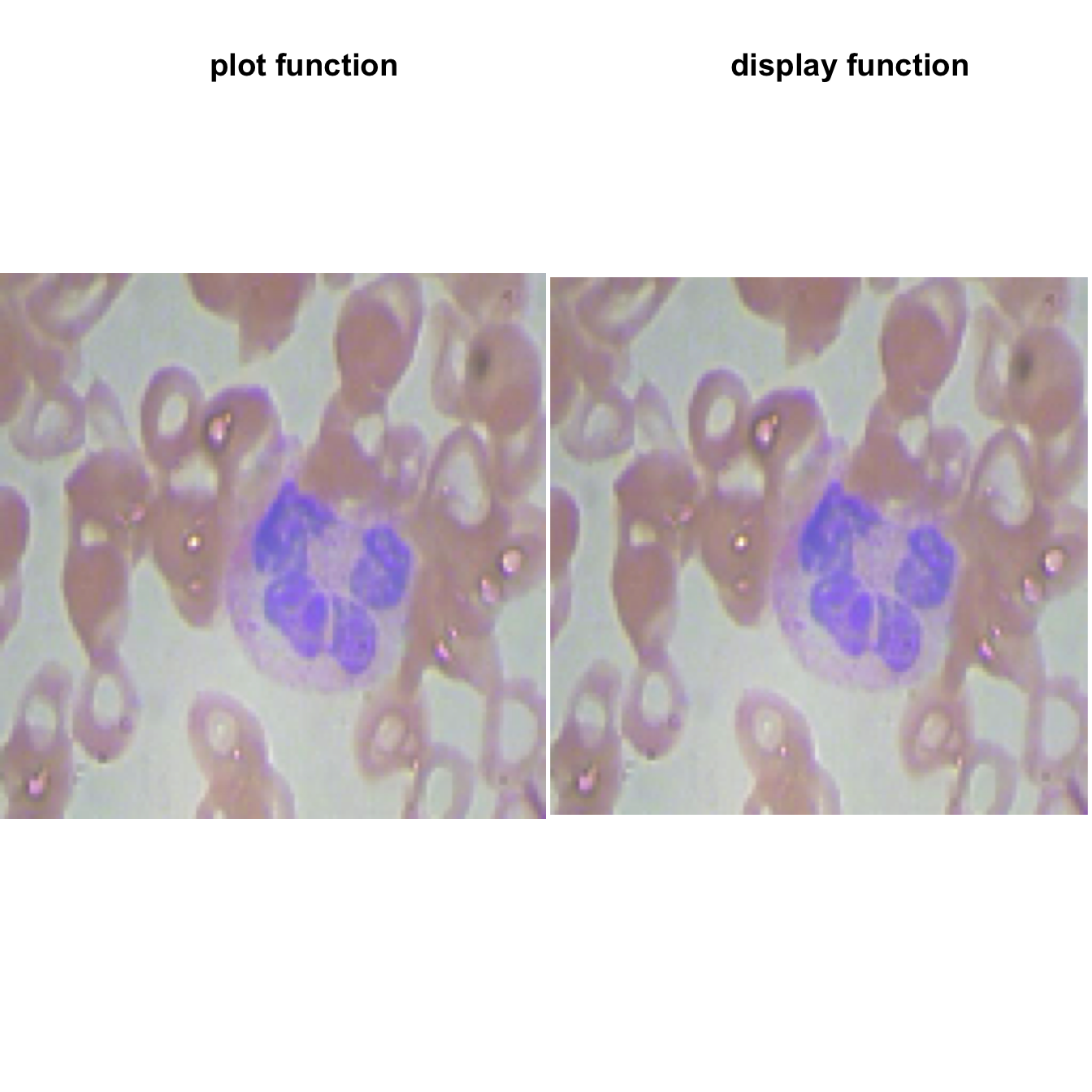

library(EBImage)

path_to_dataset <- "dataset-master/JPEGImages/"

img <- EBImage::readImage(paste0(path_to_dataset, "BloodImage_00000.jpg"))

img_resize = resize(img, w=128, h=128)

par(mfrow=c(1,2)) # , mai=c(0.1,0.1,0.2,0.1), oma=c(3,3,3,3)

plot(img_resize)

title( main = "plot function")

display(img_resize, method = "raster", all = TRUE, margin = 1)

title( main = "display function")

We observe one purple cell which is a white blood cell. The other cells are read blood cell.

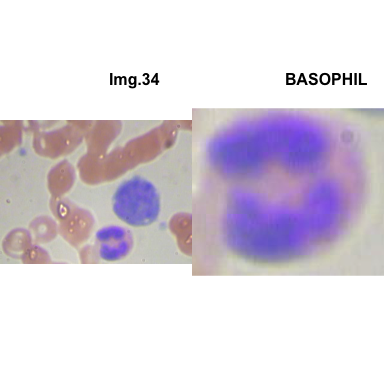

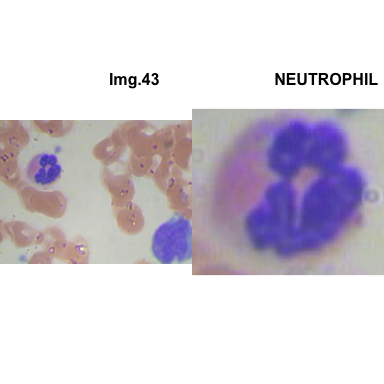

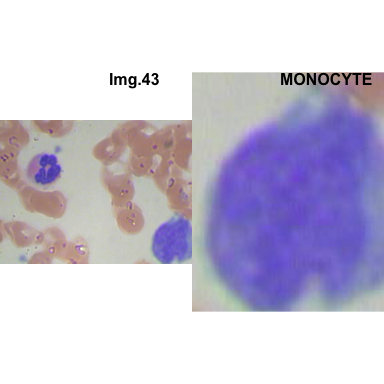

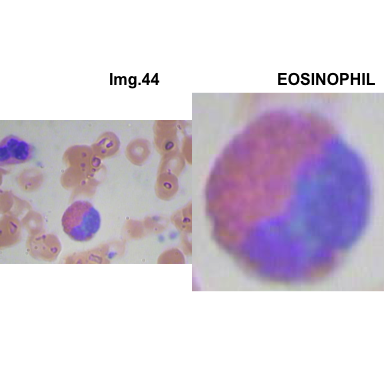

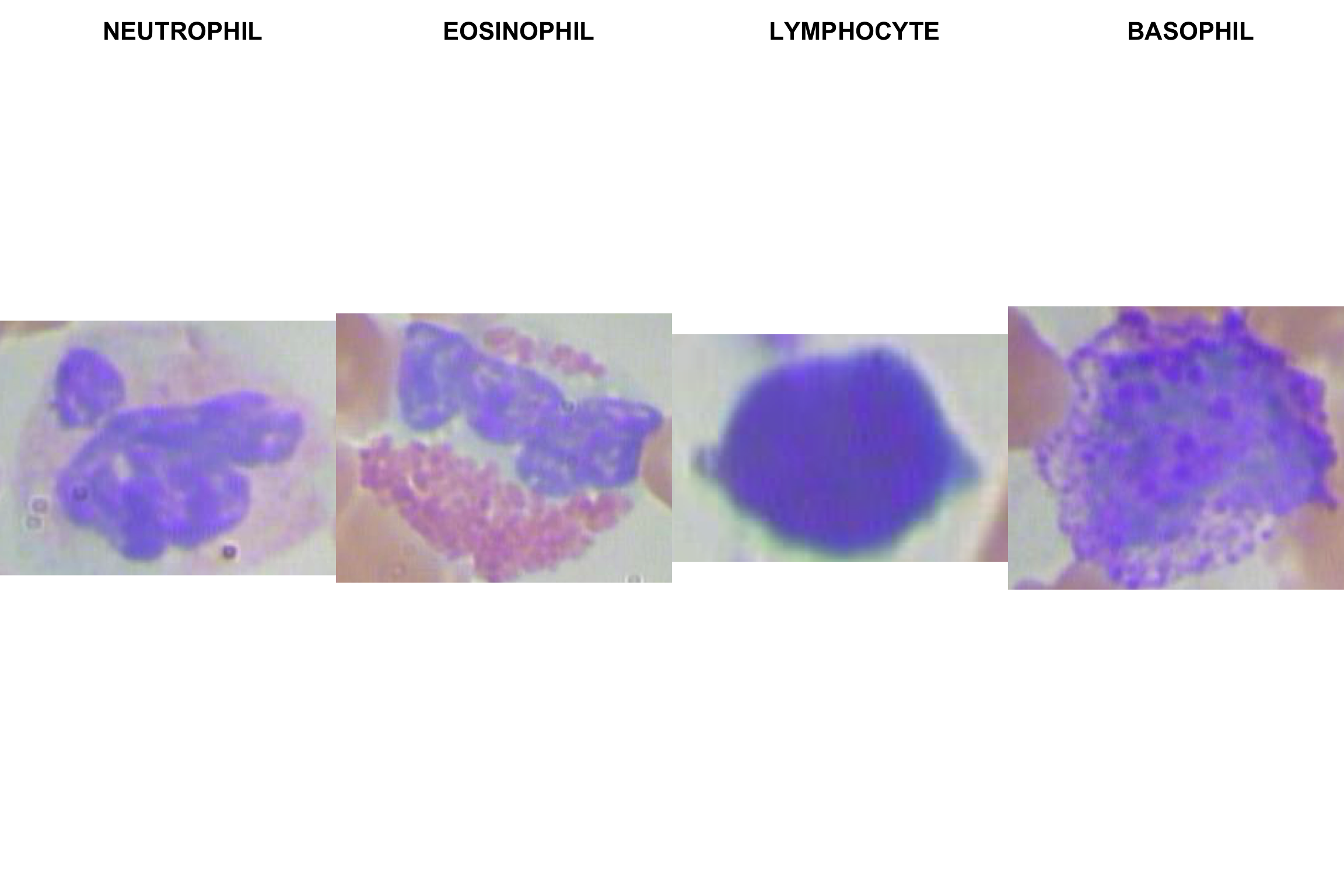

There are 4 types of immune cells, named also White Blood Cells (WBC).

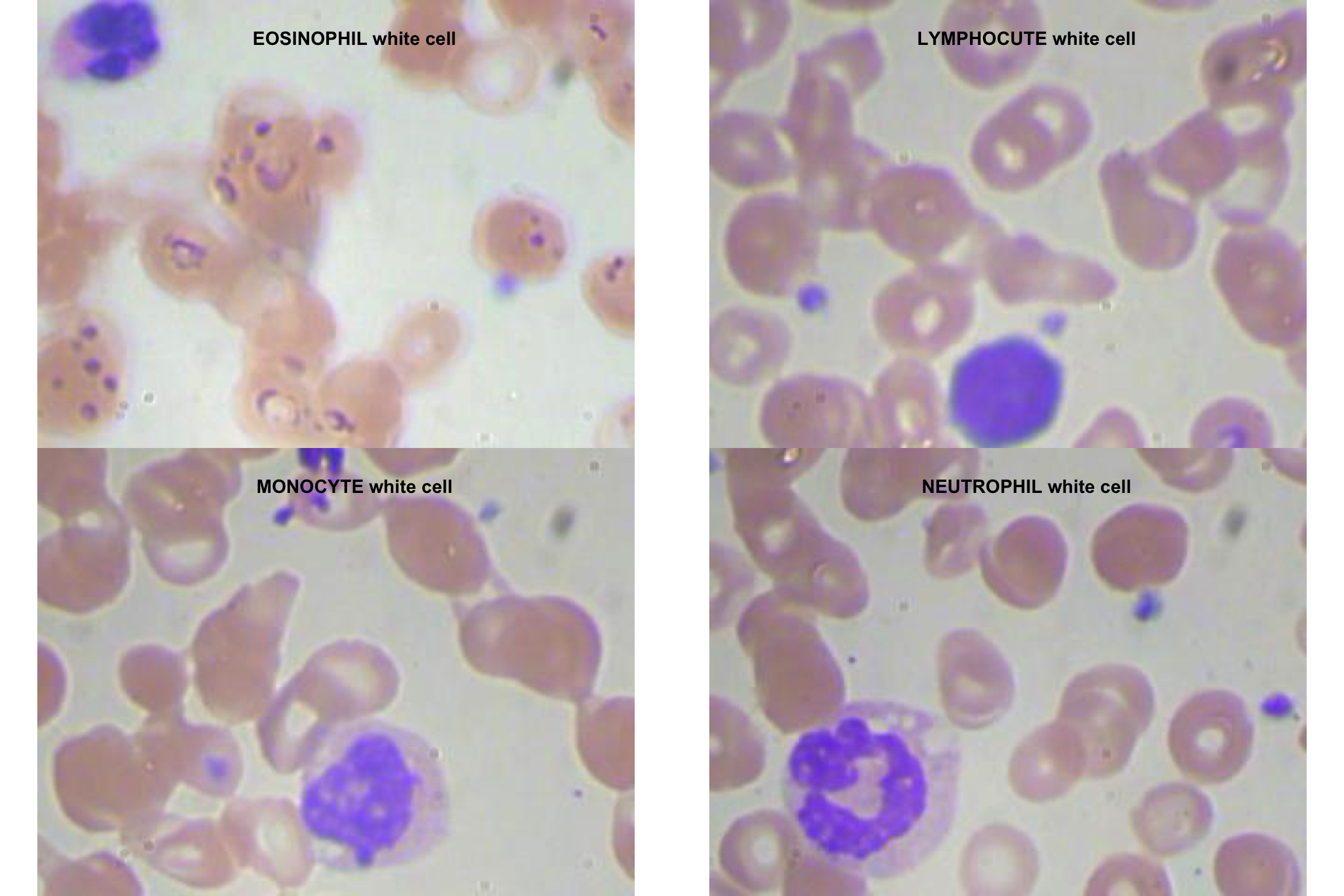

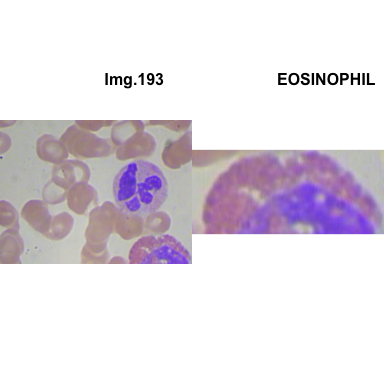

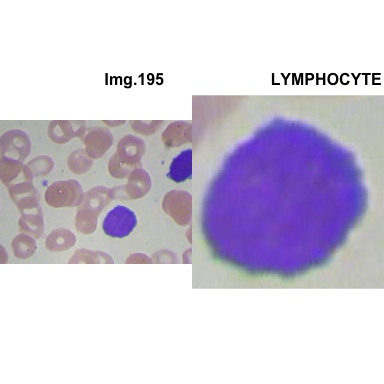

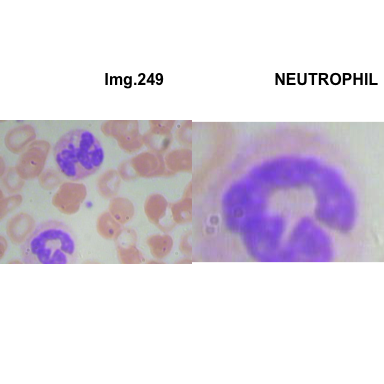

1.3.2 Glimpse of the 4 White Blood Cell types

path_to_dataset2 <- "dataset2-master/images/TEST_SIMPLE/"

img_eosinophil <- EBImage::readImage(paste0(path_to_dataset2, "EOSINOPHIL/_0_5239.jpeg"))

img_lymphocyte <- EBImage::readImage(paste0(path_to_dataset2, "LYMPHOCYTE/_0_3975.jpeg"))

img_monocyte <- EBImage::readImage(paste0(path_to_dataset2, "MONOCYTE/_0_5020.jpeg"))

img_neutrophil <- EBImage::readImage(paste0(path_to_dataset2, "NEUTROPHIL/_0_1966.jpeg"))

par(mfrow=c(2,2))

graphics::plot(img_eosinophil)

title(main = "EOSINOPHIL white cell")

plot(img_lymphocyte)

title(main = "LYMPHOCUTE white cell")

plot(img_monocyte)

title(main = "MONOCYTE white cell")

plot(img_neutrophil)

title(main = "NEUTROPHIL white cell")

1.4 Description of the First Dataset

The first dataset directory names dataset-master contains 3 folders:

- JPEGImages that has 366 jpeg images

- csv file that list the image label and the white cell type that has.

- XML annotation folder that frame the different kind of cells in each image (343 XML files).

- Green square for red blood cell (RB)

- Red square for white blood cell (WBC)

- Blue square for Platelets

1.5 Exploration and Transformation of the first dataset

1.5.1 Transformation of labels.csv file

Labels associate cells type. We can find Red Blood Cell (RBC), Platelets, and 4 types of White Blood Cells (WBC).

We renamed images as its files names. Some image have two kind of WBC.

We need to crop each cell from image and rang it to associated category.

# glimpse csv file

path_to_csv <- "dataset-master/labels.csv"

label <- read.csv(file = path_to_csv)

# Rename images like files

WBC_seprate_multiple_label <- label %>%

mutate(file_name = case_when(

Image %in% 0:9 ~ paste0("BloodImage_0000", Image),

Image %in% 10:99 ~ paste0("BloodImage_000", Image),

Image %in% 100:999 ~ paste0("BloodImage_00", Image),

TRUE ~ "huh?"

))%>%

select(file_name, Image, Category) %>%

# Separate categories in the same image

tidyr::separate_rows(Category)

# Rename differently the cropped cells from the same image

# This step is essential when we woulf join labels with annotations

WBC_seprate_multiple_label <-

WBC_seprate_multiple_label %>% group_by(file_name) %>%

mutate(new_file_name = if(n( ) > 1) {paste0(file_name,"_" , row_number( ))}

else {paste0(file_name)})

WBC_seprate_multiple_label## # A tibble: 426 x 4

## # Groups: file_name [411]

## file_name Image Category new_file_name

## <chr> <int> <chr> <chr>

## 1 BloodImage_00000 0 NEUTROPHIL BloodImage_00000

## 2 BloodImage_00001 1 NEUTROPHIL BloodImage_00001

## 3 BloodImage_00002 2 NEUTROPHIL BloodImage_00002

## 4 BloodImage_00003 3 NEUTROPHIL BloodImage_00003

## 5 BloodImage_00004 4 NEUTROPHIL BloodImage_00004

## 6 BloodImage_00005 5 NEUTROPHIL BloodImage_00005

## 7 BloodImage_00006 6 NEUTROPHIL BloodImage_00006

## 8 BloodImage_00007 7 NEUTROPHIL BloodImage_00007

## 9 BloodImage_00008 8 BASOPHIL BloodImage_00008

## 10 BloodImage_00009 9 EOSINOPHIL BloodImage_00009

## # … with 416 more rowsdplyr::glimpse(WBC_seprate_multiple_label )## Observations: 426

## Variables: 4

## Groups: file_name [411]

## $ file_name <chr> "BloodImage_00000", "BloodImage_00001", "BloodImag…

## $ Image <int> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 10, 11, 12, 13, …

## $ Category <chr> "NEUTROPHIL", "NEUTROPHIL", "NEUTROPHIL", "NEUTROP…

## $ new_file_name <chr> "BloodImage_00000", "BloodImage_00001", "BloodImag…# glimpse image name

dplyr::glimpse(list.files("dataset-master/JPEGImages/"))## chr [1:364] "BloodImage_00000.jpg" "BloodImage_00001.jpg" ...table(WBC_seprate_multiple_label$Category)##

## BASOPHIL EOSINOPHIL LYMPHOCYTE MONOCYTE NEUTROPHIL

## 43 4 97 38 23 221- NB: there is no the same number of labels (441) and images (366)

- There is 426 - 411 images with multiple WBC

1.5.2 Explore and Transform XML file

Path_to_xml_file_9 <- "dataset-master/Annotations/BloodImage_00009.xml"

xml9 <- XML::xmlParse(Path_to_xml_file_9)

xml_df <- XML::xmlToDataFrame(Path_to_xml_file_9)

DT::datatable(xml_df) %>%

formatStyle(colnames(xml_df), color = 'black')#print(xml_df[['bndbox']])We converted an example of XML file to dataframe. We find interesting information about the x,y coordinates of cells.

bndboxcolumn show the x and y limits of the annotations. We need to extract xmin, ymin, xmax, and ymax.namecolumn indicates the type of cells.

Here the coordinate of multiple RBC are poorly represented. We observe a concatenation of 4 coordinates: xmin, xmax, ymin, ymax.

I opened multiple XML file and I did not find WBC cell with its x, y limits.

You can use extract_square_of_WBC("dataset-master/Annotations/BloodImage_00019.xml") function and change the name of the image.

The output does not have WCB sample. In actual document the code was run with the dataset download from github.

- I replaced Dataset-master/Annotations and Dataset_master/JPEGImages from github

- At first view it seems updated and xml files have WBC annotations wich is not available for kaggle dataset version

1.5.3 Function to extract x, y limits of WBC from xml files

# Extract annotation of cell as dataframe (square limits)

extract_square_of_WBC <- function(file_path){

xml_obj <- XML::xmlParse(file_path)

xml_obj_df <- bind_cols( # or cbind()

## print the name of cell

xmlToDataFrame(getNodeSet(xml_obj, "//name"),stringsAsFactors = TRUE),

# print dimension

xmlToDataFrame(getNodeSet(xml_obj, "//object/bndbox"), stringsAsFactors = FALSE)

)

xml_obj_df %>%

rename( "Cells" = text) %>%

mutate(xmin = as.numeric(xmin)) %>%

mutate(ymin = as.numeric(ymin)) %>%

mutate(xmax = as.numeric(xmax)) %>%

mutate(ymax = as.numeric(ymax)) %>%

filter(Cells == "WBC") %>%

mutate(file_name = basename(file_path))

}

extract_square_of_WBC("dataset-master/Annotations/BloodImage_00019.xml")## Cells xmin ymin xmax ymax file_name

## 1 WBC 107 97 295 267 BloodImage_00019.xml1.5.4 Group all x, y limits of WBC into dataframe

## get all squares of WBC cell for all images

Path_to_xml_file_list <- list.files("dataset-master/Annotations",full.names = TRUE)

ls_df <- lapply(Path_to_xml_file_list, function(x) extract_square_of_WBC(x))

xy_limits_WCB <- do.call("rbind",ls_df)

DT::datatable(xy_limits_WCB) %>%

formatStyle(colnames(xy_limits_WCB), color = 'black')- Mainly we have one WBC for each image BUT some image have two type of WBC. for example image 10 has two WCB (BloodImage_00010.xml).

In the next step we need to add annotation/type of each WBC. The annotation is in the dataframe named WBC_seprate_multiple_label (see above).

1.5.5 Explore only images with multiple WBC

## Explore only image with multiple WBC

only_image_multiple_WBC <-

WBC_seprate_multiple_label[duplicated(WBC_seprate_multiple_label$file_name, fromLast = FALSE) |

duplicated(WBC_seprate_multiple_label$file_name, fromLast = TRUE),]

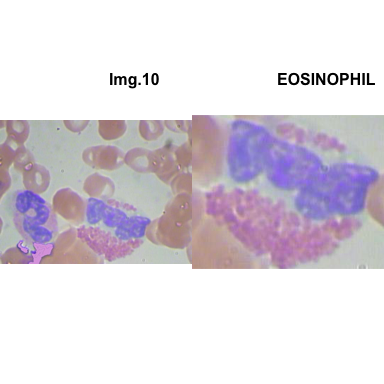

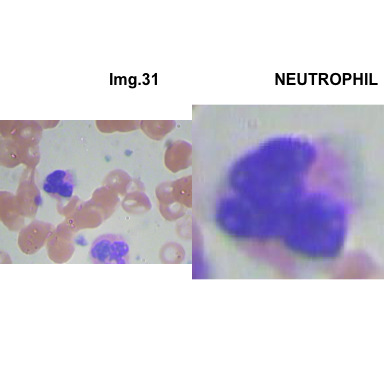

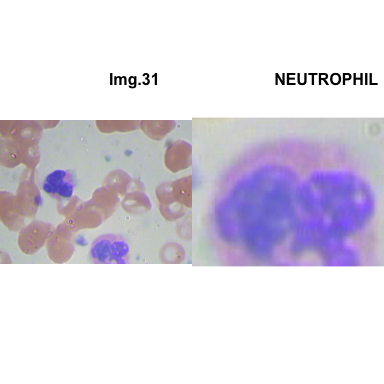

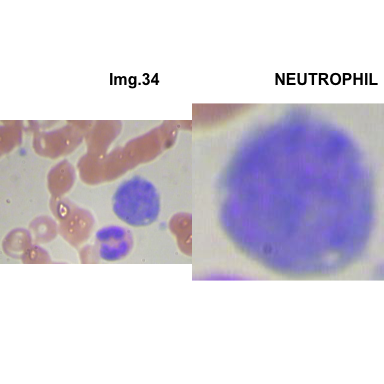

only_image_multiple_WBC## # A tibble: 30 x 4

## # Groups: file_name [15]

## file_name Image Category new_file_name

## <chr> <int> <chr> <chr>

## 1 BloodImage_00010 10 NEUTROPHIL BloodImage_00010_1

## 2 BloodImage_00010 10 EOSINOPHIL BloodImage_00010_2

## 3 BloodImage_00031 31 NEUTROPHIL BloodImage_00031_1

## 4 BloodImage_00031 31 NEUTROPHIL BloodImage_00031_2

## 5 BloodImage_00034 34 NEUTROPHIL BloodImage_00034_1

## 6 BloodImage_00034 34 BASOPHIL BloodImage_00034_2

## 7 BloodImage_00043 43 NEUTROPHIL BloodImage_00043_1

## 8 BloodImage_00043 43 MONOCYTE BloodImage_00043_2

## 9 BloodImage_00044 44 EOSINOPHIL BloodImage_00044_1

## 10 BloodImage_00044 44 EOSINOPHIL BloodImage_00044_2

## # … with 20 more rows1.5.6 Merge all needed informations of images with multiple WBC into dataframe

## Merge all needed informations of images with multiple WBC into dataframe

path_to_dataset <- "dataset-master/JPEGImages/"

crops_WBC_df <-

xy_limits_WCB %>%

mutate(file_name = gsub(".xml", "", file_name)) %>%

group_by(file_name) %>%

mutate(new_file_name = if(n( ) > 1) {paste0(file_name, "_" ,row_number( ))}

else {paste0(file_name)}) %>%

ungroup() %>%

select(-file_name) %>%

left_join(only_image_multiple_WBC , by = "new_file_name") %>%

drop_na() %>%

mutate(file_path = paste0(path_to_dataset, file_name, ".jpg"))

DT::datatable(crops_WBC_df,

options = list(

initComplete = JS(

"function(settings, json) {",

"$('body').css({'font-family': 'arial'});",

"}"

)

)) %>%

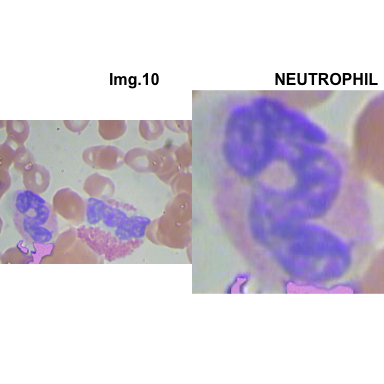

formatStyle(colnames(crops_WBC_df), color = 'black', `font-size` = '11px')1.5.7 Function to plot cropped WBC from images

# multiple plot of crops

crop_and_plot <- function(df){

l <- nrow(df)

for(i in 1:l){

attach(df)

img <- EBImage::readImage(file_path[i])

par(mfrow=c(1,2))

plot(img)

title(main= paste0("Img.",Image[i]), cex.main = 0.5, cex.sub = 0.75)

plot(img[xmin[i] : xmax[i], ymin[i] : ymax[i],])

title(main = Category[i], cex.main = 0.5, cex.sub = 0.75)

detach(df)

}

}

# l <- nrow(crops_WBC_df)

# par(mfrow = c(l,1))

crop_and_plot(crops_WBC_df)

- At this step We can avoid image with two kinds of WBC.

- We can crop all WBC and get our unicellular dataset.

Now we will crop all WBC for all images and class them to its categories.

1.5.8 Merge all needed informations of all images in dataset-master into dataframe

# we need: `xy_limits_WCB` and `WBC_seprate_multiple_label` dataframes

path_to_dataset <- "dataset-master/JPEGImages/"

all_crops_WBC_df <-

xy_limits_WCB %>%

mutate(file_name = gsub(".xml", "", file_name)) %>%

group_by(file_name) %>%

mutate(new_file_name = if(n( ) > 1) {paste0(file_name, "_" ,row_number( ))}

else {paste0(file_name)}) %>%

ungroup() %>%

select(-file_name) %>%

left_join(WBC_seprate_multiple_label , by = "new_file_name") %>%

# remove all rows with any NA value , x, y, label.

drop_na() %>%

mutate(file_path = paste0(path_to_dataset, file_name, ".jpg"))

DT::datatable(all_crops_WBC_df, editable = TRUE,

options = list(

initComplete = JS(

"function(settings, json) {",

"$('body').css({'font-family': 'arial'});",

"}"

)

)) %>%

formatStyle(colnames(all_crops_WBC_df), color = 'black', `font-size` = '11px')- In

dataset-master/labels.csvfile we showed, that the image_00117 (line 119) does not have label. After verification we annotated it asEOSINOPHIL.

1.5.9 Function used to crop WBC from images

We use a dataframe that contains all needed information : x, y limits of WBC, Category, image file path.

#path_to_dataset <- "dataset-master/JPEGImages/"

crop_and_rename <- function(df){

l <- nrow(df)

all_crops <- NULL

#attach(df)

for(i in 1:l){

img <- EBImage::readImage(df$file_path[i])

all_crops[[i]] <- img[df$xmin[i] : df$xmax[i], df$ymin[i] : df$ymax[i],]

names(all_crops)[[i]] <- paste0(df$Category[i],"_", i)

}

#detach(df)

return(all_crops)

}

all_crops <- crop_and_rename(all_crops_WBC_df)

attach(all_crops_WBC_df)

par(mfrow=c(1,4))

plot(all_crops[[2]])

title(main = Category[2], cex.main = 2, cex.sub = 0.75)

plot(all_crops[[12]])

title(main = Category[12], cex.main = 2, cex.sub = 0.75)

plot(all_crops[[22]])

title(main = Category[22], cex.main = 2, cex.sub = 0.75)

plot(all_crops[[9]])

title(main = Category[9], cex.main = 2, cex.sub = 0.75)

detach(all_crops_WBC_df)1.5.10 Glimpse of some crops

We have 368 crops for 4 types of WBC. Each crop is and imageData format used by the main R image packages.

all_crops[8:12]## $NEUTROPHIL_8

## Image

## colorMode : Color

## storage.mode : double

## dim : 195 194 3

## frames.total : 3

## frames.render: 1

##

## imageData(object)[1:5,1:6,1]

## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0.6784314 0.6784314 0.6745098 0.6745098 0.6745098 0.6823529

## [2,] 0.6823529 0.6784314 0.6784314 0.6745098 0.6745098 0.6862745

## [3,] 0.6823529 0.6823529 0.6823529 0.6784314 0.6784314 0.6901961

## [4,] 0.6901961 0.6862745 0.6862745 0.6862745 0.6823529 0.6941176

## [5,] 0.6941176 0.6901961 0.6901961 0.6901961 0.6901961 0.6980392

##

## $BASOPHIL_9

## Image

## colorMode : Color

## storage.mode : double

## dim : 236 199 3

## frames.total : 3

## frames.render: 1

##

## imageData(object)[1:5,1:6,1]

## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0.7019608 0.6901961 0.6705882 0.6705882 0.6705882 0.6627451

## [2,] 0.7098039 0.6901961 0.6823529 0.6823529 0.6784314 0.6705882

## [3,] 0.7137255 0.6980392 0.6980392 0.6980392 0.6941176 0.6862745

## [4,] 0.7215686 0.7058824 0.7058824 0.7019608 0.6980392 0.6862745

## [5,] 0.7333333 0.7215686 0.6980392 0.6980392 0.6941176 0.6823529

##

## $EOSINOPHIL_10

## Image

## colorMode : Color

## storage.mode : double

## dim : 233 287 3

## frames.total : 3

## frames.render: 1

##

## imageData(object)[1:5,1:6,1]

## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0.6745098 0.6745098 0.6745098 0.6745098 0.6745098 0.6745098

## [2,] 0.6705882 0.6705882 0.6705882 0.6705882 0.6705882 0.6705882

## [3,] 0.6588235 0.6588235 0.6627451 0.6627451 0.6627451 0.6588235

## [4,] 0.6549020 0.6588235 0.6627451 0.6666667 0.6666667 0.6666667

## [5,] 0.6588235 0.6627451 0.6705882 0.6745098 0.6784314 0.6784314

##

## $NEUTROPHIL_11

## Image

## colorMode : Color

## storage.mode : double

## dim : 182 193 3

## frames.total : 3

## frames.render: 1

##

## imageData(object)[1:5,1:6,1]

## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0.6666667 0.6784314 0.6823529 0.6823529 0.7058824 0.7098039

## [2,] 0.6666667 0.6823529 0.6901961 0.6862745 0.7058824 0.7176471

## [3,] 0.6784314 0.6980392 0.7058824 0.7098039 0.7254902 0.7294118

## [4,] 0.6862745 0.7019608 0.7137255 0.7215686 0.7450980 0.7411765

## [5,] 0.6980392 0.7176471 0.7294118 0.7372549 0.7568627 0.7568627

##

## $EOSINOPHIL_12

## Image

## colorMode : Color

## storage.mode : double

## dim : 272 218 3

## frames.total : 3

## frames.render: 1

##

## imageData(object)[1:5,1:6,1]

## [,1] [,2] [,3] [,4] [,5] [,6]

## [1,] 0.7058824 0.7019608 0.6980392 0.6980392 0.7058824 0.7058824

## [2,] 0.7058824 0.7019608 0.6980392 0.6980392 0.7019608 0.7019608

## [3,] 0.7058824 0.7058824 0.7019608 0.6980392 0.6980392 0.6941176

## [4,] 0.7058824 0.7058824 0.7019608 0.7019608 0.7019608 0.6980392

## [5,] 0.7098039 0.7058824 0.7058824 0.7058824 0.7058824 0.70196081.5.11 Dataset Augmentation

1.5.11.1 Function generates 10 images with small modification: the input is a ImageData

The crops are stored in a list as ImageData. We have 368 images for 4 WBC.

FALSE [[1]]

FALSE NULL

FALSE

FALSE [[2]]

FALSE NULL

FALSE

FALSE [[3]]

FALSE NULL

FALSE

FALSE [[4]]

FALSE NULL

FALSE

FALSE [[5]]

FALSE NULL

FALSE

FALSE [[6]]

FALSE NULL

FALSE

FALSE [[7]]

FALSE NULL

FALSE

FALSE [[8]]

FALSE NULL

FALSE

FALSE [[9]]

FALSE NULL

FALSE

FALSE [[10]]

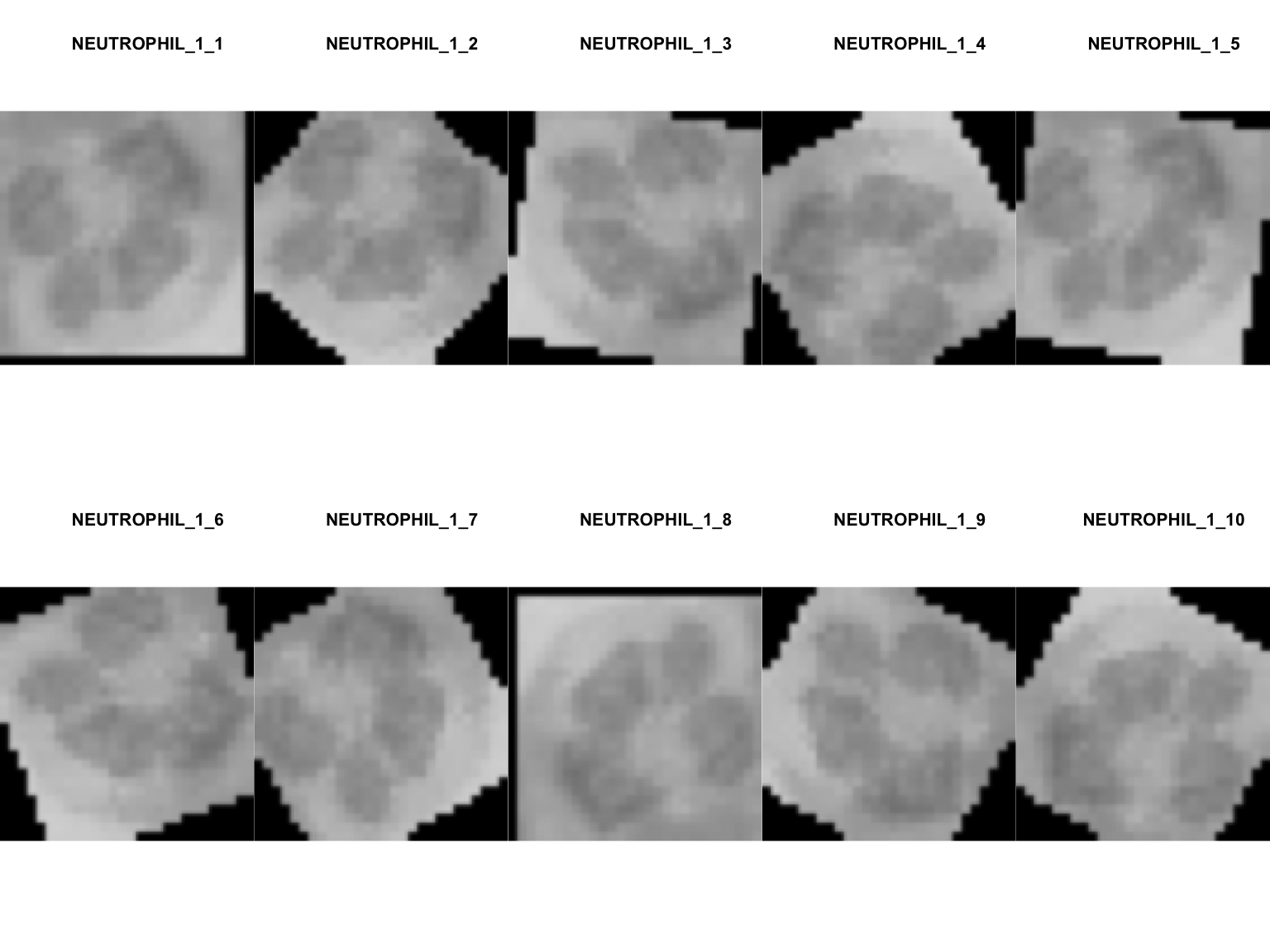

FALSE NULL1.5.11.2 Augmentation of all crops (368) of unicellular (WBC) dataset

all_crops_with_augmentation <- lapply(1:length(all_crops), function(x) img_augmentation(all_crops[x]))

names(all_crops_with_augmentation) <- lapply(1:length(all_crops), function(x) names(all_crops[x]))

str(all_crops_with_augmentation[c(1:2)]) ## List of 2

## $ NEUTROPHIL_1:List of 10

## ..$ NEUTROPHIL_1_1 : num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ NEUTROPHIL_1_2 : num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ NEUTROPHIL_1_3 : num [1:28, 1:28] 0.842 0.838 0.835 0.836 0.834 ...

## ..$ NEUTROPHIL_1_4 : num [1:28, 1:28] 0 0 0 0 0 ...

## ..$ NEUTROPHIL_1_5 : num [1:28, 1:28] 0 0 0 0 0 ...

## ..$ NEUTROPHIL_1_6 : num [1:28, 1:28] 0 0 0.827 0.827 0.829 ...

## ..$ NEUTROPHIL_1_7 : num [1:28, 1:28] 0 0 0 0 0 ...

## ..$ NEUTROPHIL_1_8 : num [1:28, 1:28] 0 0 0 0 0 ...

## ..$ NEUTROPHIL_1_9 : num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ NEUTROPHIL_1_10: num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## $ NEUTROPHIL_2:List of 10

## ..$ NEUTROPHIL_2_1 : num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ NEUTROPHIL_2_2 : num [1:28, 1:28] 0 0 0 0.692 0.759 ...

## ..$ NEUTROPHIL_2_3 : num [1:28, 1:28] 0 0 0 0 0 ...

## ..$ NEUTROPHIL_2_4 : num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ NEUTROPHIL_2_5 : num [1:28, 1:28] 0.821 0.813 0.819 0.822 0.82 ...

## ..$ NEUTROPHIL_2_6 : num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ NEUTROPHIL_2_7 : num [1:28, 1:28] 0 0 0 0 0 0 0 0 0 0 ...

## ..$ NEUTROPHIL_2_8 : num [1:28, 1:28] 0 0 0 0 0 ...

## ..$ NEUTROPHIL_2_9 : num [1:28, 1:28] 0 0 0.827 0.826 0.829 ...

## ..$ NEUTROPHIL_2_10: num [1:28, 1:28] 0 0 0 0 0 ...- At this step we generated 10 x 368 images.

- The unicellular dataset is stored as a list of 368 child lists.

- In each child list there are 10 generated images.

- To plot an example from a list of list we need to specify the index of the first list, followed by the index of the name of child list (always 1) and the index of image.

1.5.12 Plot image from a list of list

# plot le third image of the second list

plot(EBImage::Image(all_crops_with_augmentation[2][[1]][[3]]))

1.5.12.1 How many crops per Category of unicellular dataset?

We will count the list of crops without augmentation. If we consider the augmentation preprocessing, we could multiply the size by 10.

neutrophil_dataset_idx <- sapply(names(all_crops_with_augmentation),

function(vec) {grepl("neutrophil", vec, ignore.case=TRUE)})

basophil_dataset_idx <- sapply(names(all_crops_with_augmentation),

function(vec) {grepl("basophil", vec, ignore.case=TRUE)})

eosinophil_dataset_idx <- sapply(names(all_crops_with_augmentation),

function(vec) {grepl("eosinophil", vec, ignore.case=TRUE)})

lymphocyte_dataset_idx <- sapply(names(all_crops_with_augmentation),

function(vec) {grepl("lymphocyte", vec, ignore.case=TRUE)})

monocyte_dataset_idx <- sapply(names(all_crops_with_augmentation),

function(vec) {grepl("monocyte", vec, ignore.case=TRUE)})

data.frame(

neutrophil = length(all_crops_with_augmentation[neutrophil_dataset_idx]),

basophil = length(all_crops_with_augmentation[basophil_dataset_idx]),

eosinophil = length(all_crops_with_augmentation[eosinophil_dataset_idx]),

lymphocyte = length(all_crops_with_augmentation[lymphocyte_dataset_idx]),

monocyte = length(all_crops_with_augmentation[monocyte_dataset_idx])

)## neutrophil basophil eosinophil lymphocyte monocyte

## 1 215 3 90 38 22- The dataset has only 30 images from basophil WBC.

1.5.12.2 Random sampling, Split Testing and Training datasets

basophil_dataset <- all_crops_with_augmentation[basophil_dataset_idx]

eosinophil_dataset <- all_crops_with_augmentation[eosinophil_dataset_idx]

neutrophil_dataset <- all_crops_with_augmentation[neutrophil_dataset_idx]

lymphocyte_dataset <- all_crops_with_augmentation[lymphocyte_dataset_idx]

monocyte_dataset <- all_crops_with_augmentation[monocyte_dataset_idx]

# Random sampling

#str(lapply(basophil_dataset, function(x) {sample(x,3)}))

## generate index for samling

test_idx <- sample(seq(1,10), 3, replace = FALSE)

train_idx <- seq(1,10)[-test_idx]

# Fixed index sampling to avoid redoncy between traing and testing datasets

eosinophil_dataset_test <- lapply(eosinophil_dataset, "[", test_idx)

neutrophil_dataset_test <- lapply(neutrophil_dataset, "[", test_idx)

basophil_dataset_test <- lapply(basophil_dataset, "[", test_idx)

lymphocyte_dataset_test <- lapply(lymphocyte_dataset, "[", test_idx)

monocyte_dataset_test <- lapply(monocyte_dataset, "[", test_idx)

eosinophil_dataset_train <- lapply(eosinophil_dataset, "[", train_idx)

neutrophil_dataset_train <- lapply(neutrophil_dataset, "[", train_idx)

basophil_dataset_train <- lapply(basophil_dataset, "[", train_idx)

lymphocyte_dataset_train <- lapply(lymphocyte_dataset, "[", train_idx)

monocyte_dataset_train <- lapply(monocyte_dataset, "[", train_idx)

# sample_size <- c(1, 1, 2)

#

# selected_vals <- purrr::map2(

# .x = my_list_of_lists,

# .y = number_to_select,

# .f = function(x,y) base::sample(x, y)

# )1.5.12.3 Merge Training lists and testing lists (loop is very slow!)

## do.call also works but generate cancatenated names of matrix LIST.list.

#testing_dataset <- do.call(c,c(basophil_dataset_test, eosinophil_dataset_test, neutrophil_dataset_test, lymphocyte_datase_test))

test_dataset_list <- purrr::flatten(c(basophil_dataset_test, eosinophil_dataset_test,

neutrophil_dataset_test, lymphocyte_dataset_test, monocyte_dataset_test))

train_dataset_list <- purrr::flatten(c(basophil_dataset_train, eosinophil_dataset_train,

neutrophil_dataset_train, lymphocyte_dataset_train, monocyte_dataset_train))

#ls1 <- purrr::flatten(basophil_dataset_test)

# This fonction takes a list of images (matrix) and convert them to a vector into dataframe.

# This function can resize image before storage

img2vec_slow <- function(list, w = 28, h = 28){

df <- data.frame(matrix(ncol = (w * h), nrow = 0))

# Set names. The first column is the labels, the other columns are the pixels.

colnames(df) <- paste("pixel", c(1:(w*h) ))

for(k in 1: length(list)){

# resize image if needed

EBImage::resize(list[[k]], w = w, h = h)

# Coerce matrix to a vector

img_vector <- as.vector(list[[k]])

# add avector to a row

df[nrow(df) + 1,] <- img_vector

}

# add rownames with labels

rownames(df) <- names(list)

return(df)

}

#train_dataset_df <- img2vec_slow(train_dataset_list, w=180, h=180)

#test_dataset_df <- img2vec_slow(test_dataset_list, w = 180, h = 180)

#ptm <- proc.time()

#res <- img2vec_slow(ls1)

#print(proc.time() - ptm)- loop is very slow process

- Try to avoid lood and use

sapply

1.5.12.4 Merge Training lists and testing lists (sapply is very speed!)

f <- function(x, w = w, h = h){

# resize image if needed

EBImage::resize(x, w = w, h = h)

# Coerce matrix to a vector

img_vector <- as.vector(x)

# add avector to a row

# df[nrow(df) + 1,] <- img_vector

return(img_vector)

}

img2vec <- function(list, w = 180, h = 180){

df <- data.frame(matrix(ncol = (w * h), nrow = 0))

df <- sapply(list, f, w, h)

# Set names. The first column is the labels, the other columns are the pixels.

#colnames(df) <- paste("pixel", c(1:(w * h) ))

return(as.data.frame(t(df)))

}

train_dataset_df <- img2vec(train_dataset_list, w=28, h=28)

test_dataset_df <- img2vec(test_dataset_list, w = 28, h = 28)

ls1 <- purrr::flatten(basophil_dataset_test)

ptm <- proc.time()

res <- img2vec(ls1)

print(proc.time() - ptm)## user system elapsed

## 0.149 0.003 0.1541.5.12.5 glimpse of train and test datasets

test_dataset_df[7:20, 1:9]## V1 V2 V3 V4 V5 V6 V7

## BASOPHIL_119_5 0 0 0.0000000 0.5905709 0.5921147 0.6003855 0.6256655

## BASOPHIL_119_8 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## BASOPHIL_119_3 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## EOSINOPHIL_10_5 0 0 0.7002830 0.7069401 0.7443731 0.7305701 0.6621229

## EOSINOPHIL_10_8 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.6698928

## EOSINOPHIL_10_3 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## EOSINOPHIL_12_5 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## EOSINOPHIL_12_8 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## EOSINOPHIL_12_3 0 0 0.6866380 0.6826481 0.6887495 0.6928559 0.6901238

## EOSINOPHIL_19_5 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## EOSINOPHIL_19_8 0 0 0.6793549 0.7764730 0.8054193 0.8007864 0.7960733

## EOSINOPHIL_19_3 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## EOSINOPHIL_25_5 0 0 0.0000000 0.7957277 0.7975393 0.7948215 0.7920819

## EOSINOPHIL_25_8 0 0 0.0000000 0.0000000 0.0000000 0.0000000 0.0000000

## V8 V9

## BASOPHIL_119_5 0.7496248 0.7761420

## BASOPHIL_119_8 0.0000000 0.0000000

## BASOPHIL_119_3 0.7599528 0.7633519

## EOSINOPHIL_10_5 0.7073716 0.7150666

## EOSINOPHIL_10_8 0.6603926 0.6443599

## EOSINOPHIL_10_3 0.0000000 0.0000000

## EOSINOPHIL_12_5 0.7077450 0.7246248

## EOSINOPHIL_12_8 0.0000000 0.0000000

## EOSINOPHIL_12_3 0.6938686 0.7115734

## EOSINOPHIL_19_5 0.0000000 0.0000000

## EOSINOPHIL_19_8 0.7880693 0.7874461

## EOSINOPHIL_19_3 0.0000000 0.0000000

## EOSINOPHIL_25_5 0.7911085 0.7888322

## EOSINOPHIL_25_8 0.0000000 0.00000001.5.12.6 Plot image from dataframe (testing or training Datastes)

vec2img <- function(df, nrow, w= 180, h = 180, main = "if needed", xlab = "if needed"){

i <- EBImage::Image(as.numeric(df[nrow,]))

dim(i) <- c(w, h, 1)

#i <- EBImage::resize(i, w= w, h= h)

plot(i)

title(main = main, xlab = xlab ,cex.main = 1, cex.sub = 0.75)

}

vec2img(test_dataset_df, 212, 28, 28)

1.5.13 Deep learning using mxnet Package

1.5.13.1 Formating datasets to arrays

train_dataset_df <- img2vec(train_dataset_list, w=28, h=28)

test_dataset_df <- img2vec(test_dataset_list, w = 28, h = 28)

## It is the fast way to transform rownames

tmp_rowname_test <- rownames(test_dataset_df)

labels <- stringr::str_extract(tmp_rowname_test, "\\w+[^_\\d]")

rownames(test_dataset_df) <- NULL

Test <- cbind(labels, test_dataset_df)

tmp_rowname_train <- rownames(train_dataset_df)

labels <- stringr::str_extract(tmp_rowname_train, "\\w+[^_\\d]")

rownames(train_dataset_df) <- NULL

Train <- cbind(labels, train_dataset_df)

#Set up train and test arrays

train <- data.matrix(Train)

train_x <- t(train[, -1])

train_y <- train[, 1]

train_array <- train_x

dim(train_array) <- c(28, 28, 1, ncol(train_x))

test <- data.matrix(Test)

test_x <- t(test[, -1])

test_y <- test[, 1]

test_array <- test_x

dim(test_array) <- c(28, 28, 1, ncol(test_x))

table(train[,1])##

## 1 2 3 4 5

## 21 630 266 154 1505- The classes are converted to number with

- ** 1 == Basophil **

- ** 2 == Eosinophil **

- ** 3 == Lymphocite **

- ** 4 == Monocyte **

- ** 5 == neutrophil **

1.5.14 Set training parameters

require(mxnet)

data <- mx.symbol.Variable('data')

# 1st convolutional layer

conv_1 <- mx.symbol.Convolution(data = data, kernel = c(5, 5), num_filter = 20)

tanh_1 <- mx.symbol.Activation(data = conv_1, act_type = "tanh")

pool_1 <- mx.symbol.Pooling(data = tanh_1, pool_type = "max", kernel = c(2, 2), stride = c(2, 2))

# 2nd convolutional layer

conv_2 <- mx.symbol.Convolution(data = pool_1, kernel = c(5, 5), num_filter = 50)

tanh_2 <- mx.symbol.Activation(data = conv_2, act_type = "tanh")

pool_2 <- mx.symbol.Pooling(data=tanh_2, pool_type = "max", kernel = c(2, 2), stride = c(2, 2))

# 1st fully connected layer

flatten <- mx.symbol.Flatten(data = pool_2)

fc_1 <- mx.symbol.FullyConnected(data = flatten, num_hidden = 500)

tanh_3 <- mx.symbol.Activation(data = fc_1, act_type = "tanh")

# 2nd fully connected layer

fc_2 <- mx.symbol.FullyConnected(data = tanh_3, num_hidden = 40)

# Output. Softmax output since we'd like to get some probabilities.

NN_model <- mx.symbol.SoftmaxOutput(data = fc_2)1.5.15 Built a Model

# Pre-training set up

#-------------------------------------------------------------------------------

# Set seed for reproducibility

mx.set.seed(100)

# Device used. CPU in my case.

devices <- mx.cpu()

# Training

#-------------------------------------------------------------------------------

ptm <- proc.time()

# Train the model

model <- mx.model.FeedForward.create(symbol = NN_model, # The network schema

X = train_array, # Training array

y = train_y, # Labels/classes of training dataset

ctx = devices,

num.round = 150,

array.batch.size = 20, # number of array in the batch size

learning.rate = 0.02,

momentum = 0.9,

optimizer = "sgd",

eval.metric = mx.metric.accuracy,

#initializer=mx.init.uniform(0.05),

epoch.end.callback = mx.callback.log.train.metric(100))## Start training with 1 devices## [1] Train-accuracy=0.559689925969109## [2] Train-accuracy=0.58023256213628## [3] Train-accuracy=0.580620158665864## [4] Train-accuracy=0.581395352302596## [5] Train-accuracy=0.58410853078199## [6] Train-accuracy=0.58410853078199## [7] Train-accuracy=0.58410853078199## [8] Train-accuracy=0.58410853078199## [9] Train-accuracy=0.58410853078199## [10] Train-accuracy=0.58410853078199## [11] Train-accuracy=0.583720934252406## [12] Train-accuracy=0.590697677791581## [13] Train-accuracy=0.602325585230376## [14] Train-accuracy=0.612403104009554## [15] Train-accuracy=0.631007754063421## [16] Train-accuracy=0.678682166707608## [17] Train-accuracy=0.682170544483865## [18] Train-accuracy=0.697674417911574## [19] Train-accuracy=0.729457362908726## [20] Train-accuracy=0.739922482375951## [21] Train-accuracy=0.746511630771696## [22] Train-accuracy=0.762403103270272## [23] Train-accuracy=0.772480622511502## [24] Train-accuracy=0.791860468165819## [25] Train-accuracy=0.792635662611141## [26] Train-accuracy=0.799612403378006## [27] Train-accuracy=0.802713182083396## [28] Train-accuracy=0.812015504800072## [29] Train-accuracy=0.818217055742131## [30] Train-accuracy=0.825193802053614## [31] Train-accuracy=0.828682174054227## [32] Train-accuracy=0.86395348735558## [33] Train-accuracy=0.865503874860069## [34] Train-accuracy=0.882558137409447## [35] Train-accuracy=0.880232553149379## [36] Train-accuracy=0.885271314040635## [37] Train-accuracy=0.908139530078385## [38] Train-accuracy=0.917441854643267## [39] Train-accuracy=0.927131777123887## [40] Train-accuracy=0.908527127532072## [41] Train-accuracy=0.932558134082676## [42] Train-accuracy=0.922868209291798## [43] Train-accuracy=0.927131776661836## [44] Train-accuracy=0.922093016232631## [45] Train-accuracy=0.953100768170616## [46] Train-accuracy=0.95581394665001## [47] Train-accuracy=0.951937979043916## [48] Train-accuracy=0.955813947112061## [49] Train-accuracy=0.955426349658375## [50] Train-accuracy=0.968604644139608## [51] Train-accuracy=0.975581390913143## [52] Train-accuracy=0.970930227475573## [53] Train-accuracy=0.977131778417632## [54] Train-accuracy=0.984108523805012## [55] Train-accuracy=0.994573642117109## [56] Train-accuracy=0.998837209025095## [57] Train-accuracy=0.999612403008365## [58] Train-accuracy=1## [59] Train-accuracy=1## [60] Train-accuracy=1## [61] Train-accuracy=1## [62] Train-accuracy=1## [63] Train-accuracy=1## [64] Train-accuracy=1## [65] Train-accuracy=1## [66] Train-accuracy=1## [67] Train-accuracy=1## [68] Train-accuracy=1## [69] Train-accuracy=1## [70] Train-accuracy=1## [71] Train-accuracy=1## [72] Train-accuracy=1## [73] Train-accuracy=1## [74] Train-accuracy=1## [75] Train-accuracy=1## [76] Train-accuracy=1## [77] Train-accuracy=1## [78] Train-accuracy=1## [79] Train-accuracy=1## [80] Train-accuracy=1## [81] Train-accuracy=1## [82] Train-accuracy=1## [83] Train-accuracy=1## [84] Train-accuracy=1## [85] Train-accuracy=1## [86] Train-accuracy=1## [87] Train-accuracy=1## [88] Train-accuracy=1## [89] Train-accuracy=1## [90] Train-accuracy=1## [91] Train-accuracy=1## [92] Train-accuracy=1## [93] Train-accuracy=1## [94] Train-accuracy=1## [95] Train-accuracy=1## [96] Train-accuracy=1## [97] Train-accuracy=1## [98] Train-accuracy=1## [99] Train-accuracy=1## [100] Train-accuracy=1## [101] Train-accuracy=1## [102] Train-accuracy=1## [103] Train-accuracy=1## [104] Train-accuracy=1## [105] Train-accuracy=1## [106] Train-accuracy=1## [107] Train-accuracy=1## [108] Train-accuracy=1## [109] Train-accuracy=1## [110] Train-accuracy=1## [111] Train-accuracy=1## [112] Train-accuracy=1## [113] Train-accuracy=1## [114] Train-accuracy=1## [115] Train-accuracy=1## [116] Train-accuracy=1## [117] Train-accuracy=1## [118] Train-accuracy=1## [119] Train-accuracy=1## [120] Train-accuracy=1## [121] Train-accuracy=1## [122] Train-accuracy=1## [123] Train-accuracy=1## [124] Train-accuracy=1## [125] Train-accuracy=1## [126] Train-accuracy=1## [127] Train-accuracy=1## [128] Train-accuracy=1## [129] Train-accuracy=1## [130] Train-accuracy=1## [131] Train-accuracy=1## [132] Train-accuracy=1## [133] Train-accuracy=1## [134] Train-accuracy=1## [135] Train-accuracy=1## [136] Train-accuracy=1## [137] Train-accuracy=1## [138] Train-accuracy=1## [139] Train-accuracy=1## [140] Train-accuracy=1## [141] Train-accuracy=1## [142] Train-accuracy=1## [143] Train-accuracy=1## [144] Train-accuracy=1## [145] Train-accuracy=1## [146] Train-accuracy=1## [147] Train-accuracy=1## [148] Train-accuracy=1## [149] Train-accuracy=1## [150] Train-accuracy=1print(proc.time() - ptm)## user system elapsed

## 850.373 220.489 705.7441.5.16 Summary of the model

summarymxnet <- summary(model$arg.params)

data.frame(do.call(rbind, list(summarymxnet))) %>%

tibble::rownames_to_column("layers")## layers Length Class Mode

## 1 convolution0_weight 500 MXNDArray externalptr

## 2 convolution0_bias 20 MXNDArray externalptr

## 3 convolution1_weight 25000 MXNDArray externalptr

## 4 convolution1_bias 50 MXNDArray externalptr

## 5 fullyconnected0_weight 400000 MXNDArray externalptr

## 6 fullyconnected0_bias 500 MXNDArray externalptr

## 7 fullyconnected1_weight 20000 MXNDArray externalptr

## 8 fullyconnected1_bias 40 MXNDArray externalptr1.5.17 Test Model

#Predict labels

predicted <- predict(model, test_array)

predicted <- mxnet:::predict.MXFeedForwardModel(model = model, X = test_array)

# Assign labels

predicted_labels <- max.col(t(predicted)) -1

# Get accuracy

table(test_y, predicted_labels) ## predicted_labels

## test_y 1 2 3 4 5

## 1 0 3 2 1 3

## 2 2 178 7 5 78

## 3 0 6 98 5 5

## 4 0 14 8 25 19

## 5 1 60 7 9 568# remember the size of images used for testing

table(test[,1])##

## 1 2 3 4 5

## 9 270 114 66 645- Category

1corresponding toBasophilcategory seems to have the worst rate. The model was identify 1/9 image. - It is expected because the datasets of basophil is samll.

- Eosinophi 163/270

- Lymphocyte 103/114

- Neutrophil 575/645

- The model was predict 95 False Positive (FP) Neutrophil and 65 FP Eosinophil.

1.5.18 Compute the means of identical labels, located in the diagonal

## get means of identical labels, located in the diagonal

sum(diag(table(test_y,predicted_labels)))/length(test_y)## [1] 0.7871377- We identify 81% of the test dataset images

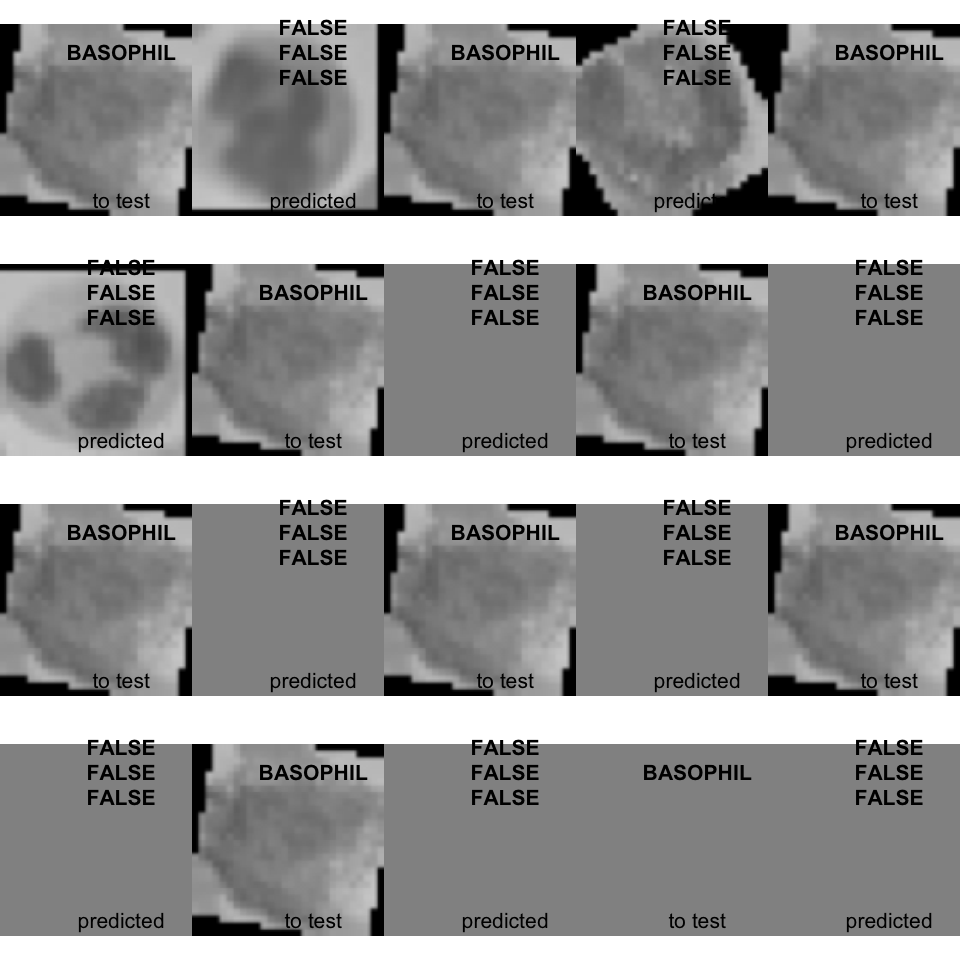

1.5.19 Visualize predicted class and compare them to original class from testing dataset

# class arg could be: "BASPPHIL", "NEUTROPHIL", "LYMPHOCYTE", "EOSINOPHIL"

# n_img is the number of image would you want to plot.

# If could be the sum of predicted images and image for testing for one class.

plot_result <- function(class = "BASOPHIL", n_img = 10){

par(mfrow=c(4,5))

lapply(1:n_img, function(i){

## generate index for each class/folder

key0 <- strsplit(unique(labels), ",")

df_index <- data.frame(index = rep(seq_along(key0), sapply(key0, length)), ID = unlist(key0))

idx <- df_index[which(df_index[,2] == class),1]

## we would like to select classes 3

selected_vec_test <- test_y[test_y == idx]

selected_vec_predicted <- which(predicted_labels == idx)

vec2img(Test[selected_vec_test,][,-1],i,w= 28, h= 28, main = class, xlab = "to test")

vec2img(Test[selected_vec_predicted,][,-1],i,w= 28, h= 28, main = paste(selected_vec_predicted %in% selected_vec_test), xlab = "predicted")

})

}

plot_result(class = "BASOPHIL", 10)

## [[1]]

## NULL

##

## [[2]]

## NULL

##

## [[3]]

## NULL

##

## [[4]]

## NULL

##

## [[5]]

## NULL

##

## [[6]]

## NULL

##

## [[7]]

## NULL

##

## [[8]]

## NULL

##

## [[9]]

## NULL

##

## [[10]]

## NULL- The unique predicted basophil is FALSE, Hahahaha :-)

1.6 Description of the second dataset

The second dataset directory named dataset2-master contains image folder for each class of WBC and csv file that label images.